Abstract

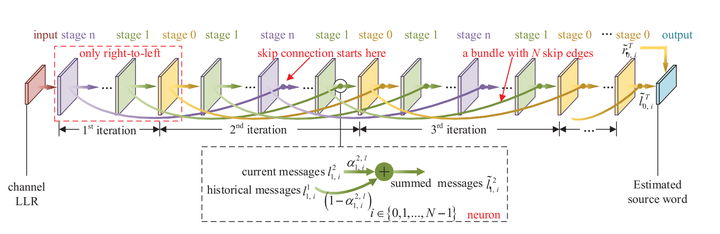

Neural polar decoders improve performance at the cost of additional storage and computation. In this letter, we first propose a ResNet-like belief propagation (BP) architecture that improves standard polar BP decoding: each iteration inherits a portion of the information from the previous iteration as an additional input and passes its output to the next iteration. Second, we apply trainable damping factors, learned with deep learning techniques, to determine the inheritance ratio in the ResNet-like BP decoder. We allocate the damping factors flexibly and demonstrate that optimizing a single shared constant damping factor achieves the same level of performance as individually optimizing the damping factor at each state. Moreover, we qualitatively explain the performance gain by analyzing the mutual information between the input and output of the decoder. Numerical and simulation results indicate that the ResNet-like BP decoder effectively improves error-correction performance and accelerates convergence compared with the standard polar BP algorithm.
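To make the inheritance idea concrete, the following is a minimal pure-Python sketch of a damped polar BP decoder with a single shared damping factor `lam`. It is not the letter's exact formulation. It assumes the standard min-sum BP update on Arıkan's polar factor graph in natural order (no bit reversal), and a ResNet-like rule in which every message after an iteration becomes `lam * previous + (1 - lam) * new`. Under this rule, `lam = 0` recovers plain BP. The function names (`polar_encode`, `damped_bp_decode`, `f_minsum`), the constant `BIG`, and the example frozen set are hypothetical. `lam` is a fixed scalar here rather than a trained parameter.

```python
BIG = 1e9  # finite stand-in for +inf on frozen-bit priors (keeps 0 * BIG == 0)


def f_minsum(x, y):
    """Min-sum approximation of the LLR boxplus (soft XOR)."""
    if x == 0 or y == 0:
        return 0.0
    sign = 1.0 if (x > 0) == (y > 0) else -1.0
    return sign * min(abs(x), abs(y))


def polar_encode(u):
    """x = u * F^{(x)n} over GF(2) with F = [[1, 0], [1, 1]], natural order."""
    x = [b % 2 for b in u]
    step = 1
    while step < len(x):
        for start in range(0, len(x), 2 * step):
            for a in range(start, start + step):
                x[a] ^= x[a + step]
        step *= 2
    return x


def damped_bp_decode(llr, frozen, n_iter=20, lam=0.0):
    """Polar BP with one shared damping factor lam (lam = 0 gives plain BP).

    Assumed ResNet-like rule: after each iteration, every message becomes
    lam * (previous-iteration message) + (1 - lam) * (new message).
    """
    N = len(llr)
    n = N.bit_length() - 1  # number of stages; N must be a power of two
    # L[i][j]: leftward message at row i, column j (column n = channel side).
    # R[i][j]: rightward message (column 0 = u side, frozen bits fixed to 0).
    L = [[0.0] * (n + 1) for _ in range(N)]
    R = [[0.0] * (n + 1) for _ in range(N)]
    for i in range(N):
        L[i][n] = float(llr[i])
        if i in frozen:
            R[i][0] = BIG
    for _ in range(n_iter):
        L_prev = [row[:] for row in L]
        R_prev = [row[:] for row in R]
        # Leftward sweep over stages n-1 .. 0; stage j pairs rows a and a + 2**j.
        for j in reversed(range(n)):
            s = 2 ** j
            for start in range(0, N, 2 * s):
                for a in range(start, start + s):
                    b = a + s
                    L[a][j] = f_minsum(L[a][j + 1], L[b][j + 1] + R[b][j])
                    L[b][j] = f_minsum(R[a][j], L[a][j + 1]) + L[b][j + 1]
        # Rightward sweep over stages 0 .. n-1.
        for j in range(n):
            s = 2 ** j
            for start in range(0, N, 2 * s):
                for a in range(start, start + s):
                    b = a + s
                    R[a][j + 1] = f_minsum(R[a][j], L[b][j + 1] + R[b][j])
                    R[b][j + 1] = f_minsum(R[a][j], L[a][j + 1]) + R[b][j]
        # ResNet-like inheritance: blend with the previous iteration's messages.
        for i in range(N):
            for j in range(n + 1):
                L[i][j] = lam * L_prev[i][j] + (1.0 - lam) * L[i][j]
                R[i][j] = lam * R_prev[i][j] + (1.0 - lam) * R[i][j]
    # Hard decision on the u side: a negative total LLR means bit 1.
    return [1 if L[i][0] + R[i][0] < 0 else 0 for i in range(N)]


if __name__ == "__main__":
    frozen = {0, 1, 2, 4}                  # hypothetical frozen set for N = 8
    u = [0, 0, 0, 1, 0, 1, 1, 0]           # information bits at rows 3, 5, 6, 7
    llr = [4.0 * (1 - 2 * bit) for bit in polar_encode(u)]  # noiseless BPSK
    print(damped_bp_decode(llr, frozen, n_iter=5, lam=0.5) == u)
```

The single shared `lam` mirrors the abstract's observation that one constant damping factor can match per-state factors. In a learned decoder, `lam` (or a per-state set of factors) would be an optimized parameter, not a fixed input.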